Large real-world graphs often exhibit power-law degree distributions, which makes the caching performed by the operating system (OS) particularly desirable for computation on them. In such graphs, information about a high-degree node tends to be accessed many times by a graph algorithm (e.g., PageRank), and is thus cached in the main memory by the OS, resulting in higher overall algorithm speed. In this paper, we present MMap, a fast and scalable graph computation method that leverages the memory mapping technique to achieve the same goal as GraphChi and TurboGraph, but through a simple design. Our major contributions are summarized below.
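The caching effect described above can be illustrated with a toy model. The sketch below is our own illustration, not MMap's code and not an OS API. It simulates a page cache with exact least-recently-used (LRU) eviction, whereas real operating systems typically implement approximations of LRU. The access trace is hypothetical: one "hub" page, standing in for the data of a high-degree node, is touched between reads of cold pages that are each read only once.

```python
from collections import OrderedDict


class LRUPageCache:
    """Toy page cache with exact LRU eviction (illustration only)."""

    def __init__(self, capacity):
        self.capacity = capacity      # number of pages that fit in "RAM"
        self.pages = OrderedDict()    # page id -> None, ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, page):
        if page in self.pages:
            self.pages.move_to_end(page)      # page becomes the most recently used
            self.hits += 1
            return
        self.misses += 1                      # page would have to be read from disk
        self.pages[page] = None
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)    # evict the least recently used page


def hub_access_pattern(num_cold_pages, hub_page=0):
    """Hypothetical trace: the hub page is touched before every cold-page read."""
    for cold in range(1, num_cold_pages + 1):
        yield hub_page
        yield cold


cache = LRUPageCache(capacity=2)
for page in hub_access_pattern(100):
    cache.access(page)
print(cache.hits, cache.misses)  # 99 101
```

Only the first touch of the hub page misses. Every later touch hits, because whenever a cold page must be evicted, the hub has been used more recently than that cold page. Each cold page misses exactly once.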

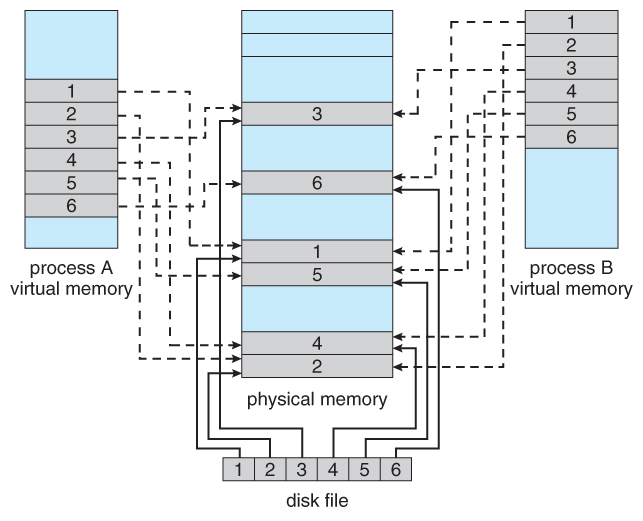

Large graphs with billions of nodes and edges are increasingly common in many domains, ranging from computer science, physics, and chemistry to bioinformatics. Such graphs’ sheer sizes call for new kinds of scalable computation frameworks. Distributed frameworks have become popular choices; prominent examples include GraphLab, PEGASUS, and Pregel. However, distributed systems can be expensive to build, and they often require cluster management and optimization skills from the user. Recent works, such as GraphChi and TurboGraph, take an alternative approach. They focus on pushing the boundaries of what a single machine can do, demonstrating impressive results: even for large graphs with billions of edges, computation can be performed at a speed that matches or even surpasses that of a distributed framework.

When analyzing these works, we observed that they often employ sophisticated techniques to efficiently handle a large number of graph edges (e.g., explicit memory allocation, edge file partitioning, and scheduling). Can we streamline all of these to provide a simpler approach that achieves the same, or even better, performance? Our curiosity led us to investigate whether memory mapping, a fundamental capability of operating systems (OS) built upon the virtual memory management system, can be a viable technique to support fast and scalable graph computation. Memory mapping is a mechanism that maps a file on the disk to the virtual memory space, which enables us to programmatically access graph edges on the disk as if they were in the main memory. In addition, the OS caches frequently used items based on policies such as the least recently used (LRU) page replacement policy. This allows us to defer memory management and optimization to the OS instead of implementing these functionalities ourselves, as GraphChi and TurboGraph did.

We believe our work provides a new direction in the design and development of scalable algorithms.
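To make "accessing graph edges on the disk as if they were in the main memory" concrete, here is a minimal sketch using Python's standard `mmap` module. It assumes a hypothetical binary edge-list layout in which each edge is stored as two little-endian unsigned 32-bit integers (source, then target). That layout, the file name, and the helper names `write_edge_file` and `MappedEdgeList` are illustrative assumptions, not MMap's actual file format or API.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical on-disk layout: each edge is (source, target) as two little-endian uint32 values.
EDGE = struct.Struct("<II")


def write_edge_file(path, edges):
    """Write (source, target) pairs in the assumed layout (illustration only)."""
    with open(path, "wb") as f:
        for src, dst in edges:
            f.write(EDGE.pack(src, dst))


class MappedEdgeList:
    """Read-only, list-like view of an edge file backed by a memory mapping."""

    def __init__(self, path):
        self._file = open(path, "rb")
        # Length 0 maps the whole (non-empty) file; the OS loads pages lazily on first access.
        self._mm = mmap.mmap(self._file.fileno(), 0, access=mmap.ACCESS_READ)

    def __len__(self):
        return len(self._mm) // EDGE.size

    def __getitem__(self, i):
        if not 0 <= i < len(self):
            raise IndexError(i)
        # Random access is just a byte offset into the mapped region; no explicit read() call.
        return EDGE.unpack_from(self._mm, i * EDGE.size)

    def close(self):
        self._mm.close()
        self._file.close()


path = os.path.join(tempfile.mkdtemp(), "edges.bin")  # hypothetical file name
write_edge_file(path, [(0, 1), (0, 2), (2, 0)])
edges = MappedEdgeList(path)
print(len(edges), edges[2])  # 3 (2, 0)
edges.close()
```

The class contains no buffering or eviction logic. Which parts of the file are resident in RAM at any moment is left entirely to the OS page cache.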
To achieve high speed and scalability, single-PC approaches such as GraphChi and TurboGraph often need sophisticated data structures and memory management strategies. We propose a minimalist approach that forgoes such requirements by leveraging the fundamental memory mapping (MMap) capability found in operating systems. We contribute:

1. a new insight that MMap is a viable technique for creating fast and scalable graph algorithms that surpasses some of the best techniques;
2. the design and implementation of popular graph algorithms for billion-scale graphs with little code, thanks to memory mapping;
3. extensive experiments on real graphs, including the 6.6-billion-edge YahooWeb graph, showing that this new approach is significantly faster than or comparable to highly optimized methods (e.g., 9.5× faster than GraphChi for computing PageRank on the 1.47B-edge Twitter graph).
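Contribution (2) states that popular graph algorithms need little code on top of memory mapping. As a hedged illustration (our own sketch, not the authors' implementation), the function below runs PageRank while streaming edges from a memory-mapped file on every iteration. It assumes a hypothetical binary edge file of little-endian uint32 (source, target) pairs, node ids in `range(num_nodes)`, and per-node vectors that fit in RAM. The damping factor 0.85 and the fixed iteration count are conventional PageRank defaults, not values taken from the paper.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical on-disk layout: each edge is (source, target) as two little-endian uint32 values.
EDGE = struct.Struct("<II")


def pagerank_mmap(path, num_nodes, damping=0.85, iterations=30):
    """PageRank over an edge file that is memory-mapped rather than loaded.

    Per-node vectors live in RAM; edges are streamed from the mapping on every
    pass, and the OS decides which pages of the file stay cached.
    """
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        out_degree = [0] * num_nodes
        for src, _dst in EDGE.iter_unpack(mm):
            out_degree[src] += 1

        rank = [1.0 / num_nodes] * num_nodes
        for _ in range(iterations):
            incoming = [0.0] * num_nodes
            for src, dst in EDGE.iter_unpack(mm):
                incoming[dst] += rank[src] / out_degree[src]
            # Rank held by nodes with no out-edges is redistributed uniformly.
            dangling = sum(r for r, d in zip(rank, out_degree) if d == 0)
            base = (1.0 - damping + damping * dangling) / num_nodes
            rank = [base + damping * x for x in incoming]
    return rank


path = os.path.join(tempfile.mkdtemp(), "cycle.bin")  # hypothetical file name
with open(path, "wb") as f:
    for src, dst in [(0, 1), (1, 2), (2, 0)]:
        f.write(EDGE.pack(src, dst))
print(pagerank_mmap(path, 3))  # three values, each approximately 1/3
```

Because every node distributes all of its rank and dangling rank is spread uniformly, the ranks keep summing to 1 after each iteration. The algorithm itself fits in a couple of loops; file I/O and caching are delegated to the mapping.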

# JAVA MEMORY MAPPED FILE PC

Graph computation approaches such as GraphChi and TurboGraph recently demonstrated that a single PC can perform efficient computation on billion-node graphs.
